Human Nature and Managing Technology Teams

September 09, 2011 at 01:45 PM

Managing technology groups can be especially challenging. I attribute this to the way that technology attracts personality types that aren't as common in traditional business roles. Specifically, I am talking about the people who gave rise to, and continue to reinforce, the stereotype of the technologist: the introverted, overly calm, big-ego nerds and geeks.

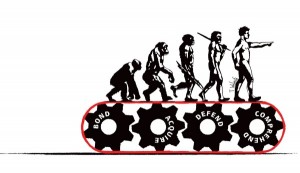

Darwin's Theory of Human Behavior suggests that we are all motivated by four drives: to acquire, to defend what we have acquired, to understand/comprehend the world around us, and to bond socially.

Technologists are a slightly different breed. Part of what motivates technology people is making it better: the interface, their programming code, the world. They want to know they are making a difference, even if it's at a personal and not a global level. I don't think this motivation can be attributed to just one of the Darwin drives; it works across all of them. Making it better is a quest to acquire new knowledge or capabilities, better preserve existing knowledge and capabilities, yield new or clearer understanding, and gain socially by being recognized for the accomplishment.

Harvard researchers surveyed the workforce to test Darwin's Theory. Their results were published in Harvard Business Review and, this month, in Harvard Magazine. The study showed that the four drives explain about 60% of employee motivation and, more importantly, that if just one drive wasn't satisfied in a person's job, it pulled down their job satisfaction in all other categories. The best results correlated with all four drives being fulfilled simultaneously.

Computer systems are logical things, and that is a natural attraction for people who fall into the NT range of the Myers-Briggs personality types. Logic and strategy are strengths of NTs, and they value truth, knowledge, competence and autonomy.

Typical technology people highly respect authority figures whom they see as deserving of their positions. However, Myers-Briggs and Carl Jung point out that NT thinkers disdain poor and unmerited leadership. This is mainly because NTs have a natural leadership ability and tendency, but they are introverted and reserved. They prefer to work behind the scenes but can emerge as effective leaders when they feel that the business objective would otherwise fail.

A big key to motivating technology teams that many managers miss is filling them in on the big picture. This goes a long way with the team because it allows them to plan for the intermediate and long term. Without understanding the strategy of the company they work for, technologists will see their leadership as erratic and ineffective.

Recognition is another area that is often overlooked. This isn't just an "atta boy".

Your technology team should be looked at and even touted as an asset where appropriate. Buy them lunches, give them perks, and let them take time off outside of the company policy if they work overtime.

As with many things, communication and understanding are keys to success in managing technology teams. I create highly effective teams by hiring competent people and laying out the strategy as well as the technology philosophy. This gives employees a compass on which to base their decisions and solutions, ensuring their efforts and contributions are in line with the needs of the organization.

Posted in Technology Management

Cloud Architecture Best Practices

August 31, 2011 at 09:33 AM

"Plan for failure" is not a new mantra when it comes to information technology. Evaluating the worst-case scenario is part of defining system requirements in many organizations. The mistake many organizations make when they start to implement cloud is that they don't re-evaluate their existing architecture and the economics around redundancy.

All organizations make trade-offs between cost and risk. Having a fully redundant architecture at all levels of the system is usually seen as unduly expensive. Big areas of exposure like databases and connectivity get addressed, but some risk is usually accepted.

One thing that changes with cloud architectures is that cost and risk equation. Failing to take that into account, combined with the assumption that fail-over is a built-in component of cloud, is what leads to downtime.
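The cost and risk trade-off can be made concrete with some simple arithmetic. The sketch below is only an illustration: the function names and every figure passed to them are hypothetical, not taken from any real deployment. It compares the expected yearly cost of outages against the yearly cost of the redundancy that would avoid them.

```python
def expected_outage_cost(outage_hours_per_year: float, cost_per_hour: float) -> float:
    """Expected yearly loss from downtime (hypothetical, simplified model)."""
    return outage_hours_per_year * cost_per_hour


def redundancy_pays_off(
    outage_hours_per_year: float,
    cost_per_hour: float,
    redundancy_cost_per_year: float,
    residual_outage_hours: float = 0.0,
) -> bool:
    """True when the downtime cost avoided exceeds the price of redundancy.

    residual_outage_hours is the downtime still expected after adding
    redundancy (an assumption; no redundancy removes all risk).
    """
    avoided_hours = outage_hours_per_year - residual_outage_hours
    avoided_cost = expected_outage_cost(avoided_hours, cost_per_hour)
    return avoided_cost > redundancy_cost_per_year
```

With made-up inputs of 8 outage hours per year, redundancy costing 50,000 per year pays off when an hour of downtime costs 10,000 but not when it costs 1,000. Rerunning the numbers with cloud pricing is the re-evaluation the paragraph above is asking for.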

Brian Heaton has published a great article, Securing Data in the Cloud, that walks through the Amazon cloud regional outage this past April. It shows contrasting examples of organizations that planned poorly and were affected and those who planned well and weren't impacted. It also lists six great rules for managing the risk of cloud outages:

1. Incorporate failover for all points in the system. Every server image should be deployable in multiple regions and data centers, so the system can keep running even if there are outages in more than one region.

2. Develop the right architecture for your software. Architectural nuances can make a huge difference to a system’s failover response. A carefully created system will keep the database in sync with a copy of the database elsewhere, allowing for a seamless failover.

3. Carefully negotiate service-level agreements. SLAs should provide reasonable compensation for the business losses you may suffer from an outage. Simply receiving prorated credit for your hosting costs during downtime won’t compensate for the costs of a large system failure.

4. Design, implement and test a disaster recovery strategy. One component of such a plan is the ability to draw on resources like failover instances, at a secondary provider. Provisions for data recovery and backup servers are also essential. Run simulations and periodic testing to ensure your plans will work.

5. In coding your software, plan for worst-case scenarios. In every part of your code, assume that the resources it needs to work might become unavailable, and that any part of the environment could go haywire. Simulate potential problems in your code, so that the software will respond correctly to cloud outages.

6. Keep your risks in perspective, and plan accordingly. In cases where even a brief downtime would incur massive costs or impair vital government services, multiple redundancies and split-second failover can be worth the investment, but it can be quite costly to eliminate the risk of a brief failure.
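Rules 1 and 5 can be illustrated with a small sketch. This is not code from the article; it is a minimal example under stated assumptions. The names `call_with_failover`, `make_flaky_fetch`, and the endpoint labels are hypothetical. The idea is to assume any resource may be unavailable, retry with a short backoff, fail over to the next region, and simulate an outage to check that the code responds correctly.

```python
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


class AllEndpointsFailed(Exception):
    """Raised when every endpoint has been tried and none responded."""


def call_with_failover(
    endpoints: Sequence[str],
    fetch: Callable[[str], T],
    retries_per_endpoint: int = 2,
    base_delay: float = 0.1,
) -> T:
    """Try each endpoint in order, retrying with exponential backoff."""
    errors = []
    for endpoint in endpoints:
        for attempt in range(retries_per_endpoint):
            try:
                return fetch(endpoint)
            except Exception as exc:  # any failure counts as "unavailable"
                errors.append((endpoint, attempt, exc))
                time.sleep(base_delay * (2 ** attempt))
    raise AllEndpointsFailed(errors)


def make_flaky_fetch(down: set) -> Callable[[str], str]:
    """Simulate an outage: endpoints listed in `down` always fail."""
    def fetch(endpoint: str) -> str:
        if endpoint in down:
            raise ConnectionError(f"{endpoint} is unavailable")
        return f"ok from {endpoint}"
    return fetch
```

Calling `call_with_failover(["region-a", "region-b"], make_flaky_fetch({"region-a"}))` falls through to the second region, while taking both regions down raises `AllEndpointsFailed`. That is the kind of simulated failure rule 5 suggests testing before a real outage does it for you.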

Another thing I see in that article and many others is that "cloud" doesn't mean "easy". Most organizations are writing middleware to work between their established processes and procedures and their cloud deployments. Part of this is that cloud enforces good virtualization practices, and I suspect many IT shops have taken shortcuts here and there. There are cloud-centric projects concentrating on configuration and deployment management, but no one size fits all, so expect to do some custom development as you migrate to the cloud.

References: Securing Data in the Cloud, by Brian Heaton on Government Technology

Posted in Cloud Computing

Disable Akonadi in KDE 4.7

August 22, 2011 at 10:02 AM

In recent KDE updates, the PIM suite (kmail, kontact) is ...uh... not really working for me. I don't know if it is truly broken or just requires a different approach and understanding.

This has me temporarily using Thunderbird for mail until I can get back to using my beloved kmail. Unfortunately, Akonadi, the engine behind the PIM suite, still insists on going out and fetching mail and doing other things.

Martin Schlander has put up a great post on how to disable Akonadi and I haven't had any messages pop up after following the steps he lists.

Posted in Arch Linux

Understanding Cloud Computing Vulnerabilities

August 19, 2011 at 09:09 AM

Discussions about cloud computing security often fail to distinguish general issues from cloud-specific issues.

Here is a great overview from IEEE Security & Privacy magazine of common IT vulnerabilities and how they are impacted by the new cloud paradigm.

The article starts off defining vulnerability in general and then goes on to establish the vulnerabilities that are inherent in cloud computing models.

It really boils down to access:

"Of all these IAAA vulnerabilities, in the experience of cloud service providers, currently, authentication issues are the primary vulnerability that puts user data in cloud services at risk."

...which is really no different than anything in traditional IT.

via InfoQ: Understanding Cloud Computing Vulnerabilities.

Posted in Cloud Computing, Security

How The Cloud Changes Disaster Recovery

July 26, 2011 at 03:44 PM

Data Center Knowledge has posted a great article illuminating the effect that cloud computing is having on the economics of disaster recovery (DR) for information technology. Having fast DR used to mean adding a considerable expense to your IT budget in order to "duplicate" what you have.

With cloud technologies, fast DR is not only less expensive, it is also a great first step towards transitioning IT infrastructure into the cloud paradigm.

http://www.datacenterknowledge.com/archives/2011/07/26/how-the-cloud-changes-disaster-recovery/

Posted in Cloud Computing